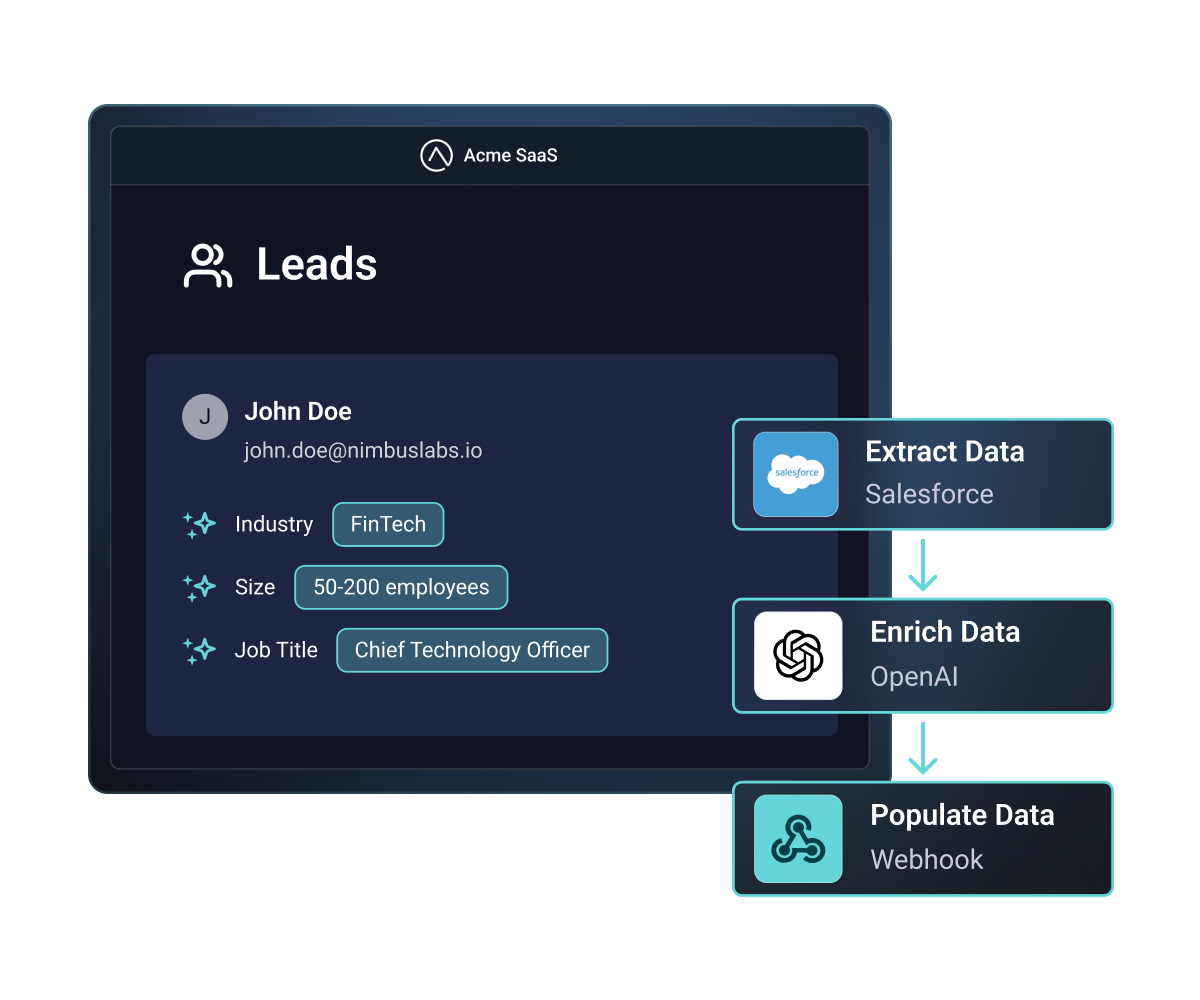

Add AI Capabilities to Integrations

Add intelligence to any workflow

Work with leading AI services

Supported AI services

- OpenAI

- Anthropic Claude

- Google Gemini

- Azure OpenAI

- Custom models via API

- And more

Enterprise-ready AI workflows

Multi-tenant credential management

Each customer can use their own AI service API keys, or you can provide shared credentials. Credentials stay fully isolated between customers, and costs are attributed to each customer.
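A minimal sketch of how per-customer credentials with a shared fallback might be resolved, assuming an in-memory store. `CredentialStore` and `resolve` are hypothetical names used only for illustration (not the platform's API), and a production system would read keys from an encrypted secrets store rather than a dict.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CredentialStore:
    """Hypothetical store: per-customer AI API keys plus an optional shared key."""
    shared_key: Optional[str] = None
    customer_keys: dict[str, str] = field(default_factory=dict)

    def resolve(self, customer_id: str) -> tuple[str, str]:
        """Return (api_key, source) for one customer.

        A customer's own key always wins; otherwise fall back to the shared
        key. Lookups are keyed strictly by customer_id, so one customer never
        receives another customer's key.
        """
        if customer_id in self.customer_keys:
            return self.customer_keys[customer_id], "customer"
        if self.shared_key is not None:
            return self.shared_key, "shared"
        raise LookupError(f"no AI credentials configured for {customer_id!r}")


store = CredentialStore(shared_key="sk-shared", customer_keys={"acme": "sk-acme"})
print(store.resolve("acme"))    # ('sk-acme', 'customer')
print(store.resolve("globex"))  # ('sk-shared', 'shared')
```

Returning the source alongside the key lets downstream usage tracking tell customer-paid calls apart from calls billed to shared credentials.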

Error handling and retries

Built-in retry logic and graceful degradation when AI services are unavailable or rate-limited.
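A common way to implement this kind of retry logic is exponential backoff with jitter, returning a fallback value when every attempt fails. The sketch below assumes a hypothetical `TransientAIError` for rate-limit and outage responses; `call_with_retries` and its parameters are illustrative, not the platform's built-in implementation.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransientAIError(Exception):
    """Hypothetical error raised for retryable failures (e.g. HTTP 429 or 503)."""


def call_with_retries(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    fallback: Optional[T] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[T]:
    """Retry transient failures with exponential backoff and jitter.

    Any other exception propagates immediately. If every attempt fails
    transiently, return `fallback` instead of raising so the workflow
    can degrade gracefully.
    """
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientAIError:
            if attempt == max_attempts - 1:
                break  # out of attempts; no point sleeping again
            delay = base_delay * 2 ** attempt  # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay * 0.1))  # up to 10% jitter
    return fallback


# Usage: a flaky call that is rate-limited twice, then succeeds.
attempts = {"n": 0}


def flaky_summary() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientAIError("429 Too Many Requests")
    return "summary text"


print(call_with_retries(flaky_summary, sleep=lambda s: None))  # summary text
```

The injectable `sleep` keeps the helper testable without real delays; jitter spreads retries out so many workflows hitting the same rate limit do not retry in lockstep.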

Prompt management

Version-controlled prompts that can be updated without redeploying integrations. Test and refine AI behavior safely.
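One way to decouple prompts from deployments is a registry that stores every prompt version and tracks which one is live, so integrations read the current prompt at run time. `PromptRegistry`, `publish`, `promote`, and `render` are hypothetical names for this sketch, which keeps everything in memory.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical versioned prompt store, kept outside integration code."""
    versions: dict[str, list[str]] = field(default_factory=dict)
    active: dict[str, int] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        """Store a new version (never overwriting old ones); return its 1-based number."""
        self.versions.setdefault(name, []).append(template)
        return len(self.versions[name])

    def promote(self, name: str, version: int) -> None:
        """Make a tested version live; older versions remain available for rollback."""
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"unknown version {version} of prompt {name!r}")
        self.active[name] = version

    def render(self, name: str, version: Optional[int] = None, **values: str) -> str:
        """Fill in a template; uses the live version unless one is pinned."""
        chosen = self.active[name] if version is None else version
        return self.versions[name][chosen - 1].format(**values)


registry = PromptRegistry()
v1 = registry.publish("summarize", "Summarize this ticket: {text}")
registry.promote("summarize", v1)

# Draft v2 and try it out while live traffic still gets v1.
v2 = registry.publish("summarize", "Summarize this ticket in one sentence: {text}")
print(registry.render("summarize", version=v2, text="Printer jammed"))
print(registry.render("summarize", text="Printer jammed"))  # still v1

registry.promote("summarize", v2)  # integrations pick up v2 on their next render
```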

Full observability

Monitor AI component performance, token usage, and costs. Debug with complete execution logs.

Rate limiting and cost controls

Set guardrails to prevent runaway costs. Monitor and alert on AI service usage per customer.
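A per-customer guardrail can be sketched as a spend tracker that is charged with an estimated token count before each AI call, refuses calls that would exceed a hard cap, and records an alert when spend crosses a soft threshold. `CostGuard` and `BudgetExceeded` are hypothetical, and the per-token price is a placeholder rather than any provider's real rate.

```python
from dataclasses import dataclass, field


class BudgetExceeded(Exception):
    """Raised when a call would push a customer past their spending cap."""


@dataclass
class CostGuard:
    """Hypothetical per-customer spend tracker with a hard cap and a soft alert."""
    limit_usd: float                 # hard cap per customer
    price_per_1k_tokens: float       # placeholder rate; use your provider's pricing
    alert_ratio: float = 0.8         # alert once spend crosses 80% of the cap
    spent: dict[str, float] = field(default_factory=dict)
    alerts: list[str] = field(default_factory=list)

    def charge(self, customer_id: str, tokens: int) -> float:
        """Reserve spend for a call before making it; return the customer's new total."""
        current = self.spent.get(customer_id, 0.0)
        new_total = current + tokens / 1000 * self.price_per_1k_tokens
        if new_total > self.limit_usd:
            raise BudgetExceeded(f"{customer_id} would exceed ${self.limit_usd:.2f}")
        self.spent[customer_id] = new_total
        threshold = self.limit_usd * self.alert_ratio
        if current < threshold <= new_total:  # alert only on the crossing call
            self.alerts.append(f"{customer_id} passed {self.alert_ratio:.0%} of budget")
        return new_total


guard = CostGuard(limit_usd=10.0, price_per_1k_tokens=0.5)  # illustrative numbers
guard.charge("acme", 12_000)  # $6.00 so far
guard.charge("acme", 6_000)   # $9.00, crosses the 80% threshold
print(guard.alerts)           # ['acme passed 80% of budget']
try:
    guard.charge("acme", 4_000)  # would reach $11.00
except BudgetExceeded as err:
    print("blocked:", err)       # blocked: acme would exceed $10.00
```

Because the check runs before the request is sent, a blocked call costs nothing; in practice the estimate would be corrected afterward using the token counts the AI service reports.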

Compliance and security

SOC 2 Type II, GDPR, and HIPAA compliant infrastructure ensures AI workflows meet enterprise security standards.

Common questions

LLM connectors are components you add to integration workflows, just like any other connector. Configure the AI service, set up your prompt, and the component handles API calls, authentication, and response parsing automatically.
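To illustrate what such a component does under the hood, here is a minimal sketch of a step that formats a prompt, authenticates, calls the AI service, and extracts the reply text. `LLMStep`, `http_transport`, and the `example-model` name are hypothetical; the endpoints, auth headers, and response fields follow the public OpenAI Chat Completions and Anthropic Messages HTTP APIs, and error handling and retries are omitted.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Callable

# (url, headers, JSON body) -> parsed JSON response
Transport = Callable[[str, dict, dict], dict]


def http_transport(url: str, headers: dict, body: dict) -> dict:
    """POST a JSON body and decode the JSON reply (stdlib only, no retries)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={**headers, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


@dataclass
class LLMStep:
    """Hypothetical workflow step: one prompt in, one text completion out."""
    provider: str          # "openai" or "anthropic" in this sketch
    model: str
    api_key: str
    prompt_template: str
    transport: Transport = http_transport

    def run(self, **inputs: str) -> str:
        messages = [{"role": "user", "content": self.prompt_template.format(**inputs)}]
        if self.provider == "openai":
            # Chat Completions: bearer-token auth, reply text under choices[0].message
            data = self.transport(
                "https://api.openai.com/v1/chat/completions",
                {"Authorization": f"Bearer {self.api_key}"},
                {"model": self.model, "messages": messages},
            )
            return data["choices"][0]["message"]["content"]
        if self.provider == "anthropic":
            # Messages API: x-api-key auth, max_tokens is required, reply under content[0]
            data = self.transport(
                "https://api.anthropic.com/v1/messages",
                {"x-api-key": self.api_key, "anthropic-version": "2023-06-01"},
                {"model": self.model, "max_tokens": 512, "messages": messages},
            )
            return data["content"][0]["text"]
        raise ValueError(f"unsupported provider {self.provider!r}")


# Usage with an offline stand-in transport, so no network call is made.
calls = []


def fake_transport(url: str, headers: dict, body: dict) -> dict:
    """Record the request and return a canned OpenAI-shaped reply."""
    calls.append((url, headers, body))
    return {"choices": [{"message": {"content": "Positive"}}]}


step = LLMStep("openai", "example-model", "sk-test",
               "Classify the sentiment of: {text}", transport=fake_transport)
print(step.run(text="Great support!"))  # Positive
```

Injecting the transport keeps the step testable offline; leaving the default `http_transport` in place (with a real key and model name) sends live requests instead.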