Integrations Overview

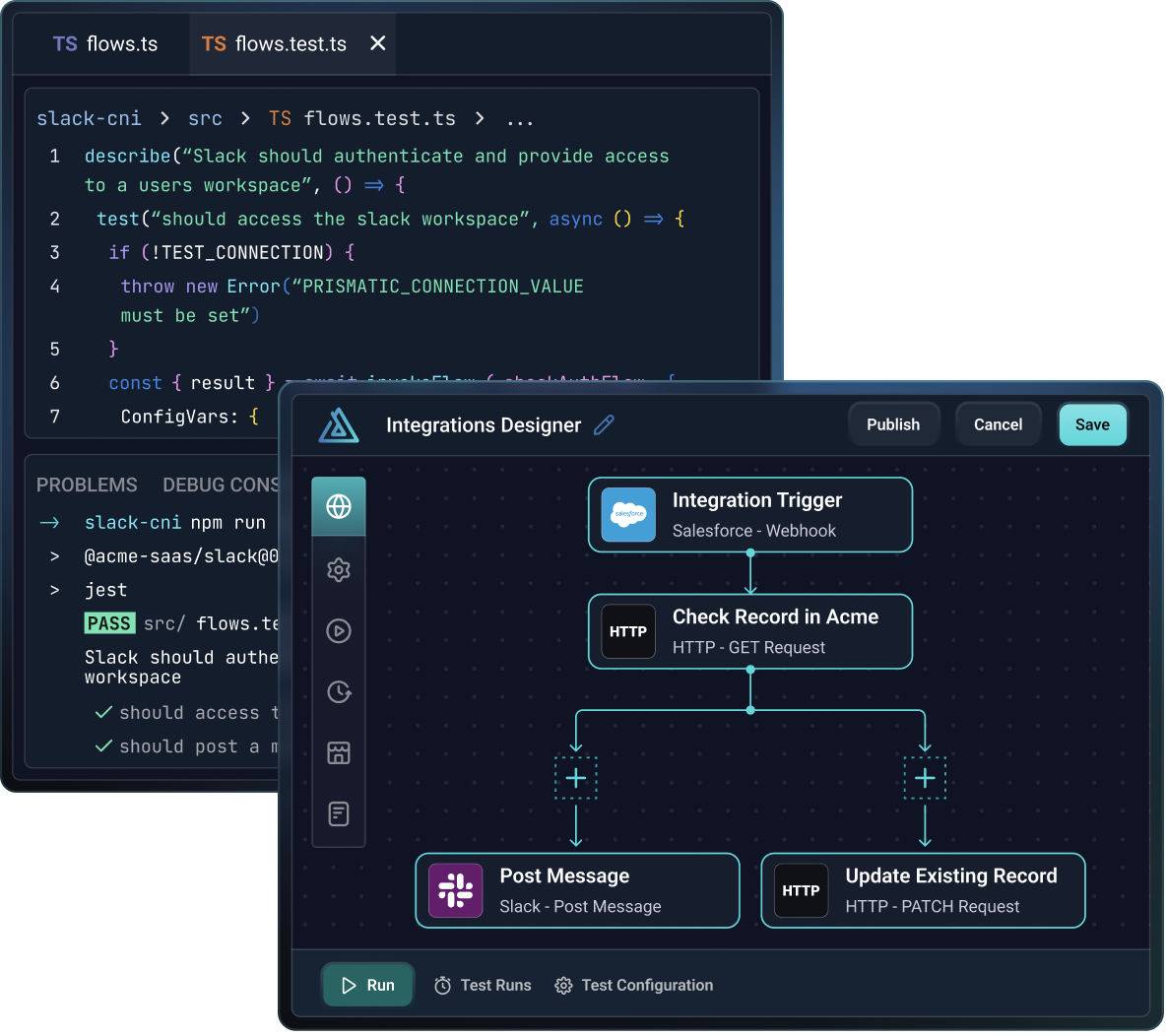

You can build an integration in the low-code designer or as a TypeScript project in your favorite IDE. We call an integration built with code a code-native integration (CNI).

An integration built with the low-code designer consists of a series of steps that execute one after another. Each step runs an action: a small piece of code designed to perform a specific task. For example, the "HTTP - GET" action fetches the contents of a webpage from the internet, and the "Amazon S3 - Put Object" action saves a file to Amazon S3. You can combine actions from common built-in components with actions from your own custom components to build an integration.
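The designer sequences steps for you, so no code is needed here. Purely to illustrate the execution model, the following is a minimal TypeScript sketch using hypothetical `Step` and `runSteps` names that are not part of Prismatic: each step runs an action, steps run strictly in order, and each result is recorded. Letting later steps read earlier results is an assumption of this sketch, and the two actions are stand-ins that make no real network calls.

```typescript
// Hypothetical model of a low-code flow for illustration only; NOT the Prismatic API.
type StepResults = Record<string, unknown>;

interface Step {
  name: string;
  // The action a step runs: a small function that performs one task.
  action: (previous: StepResults) => unknown;
}

// Run steps one after another, recording each step's result so that
// later steps (and a debugging view) can inspect it.
function runSteps(steps: Step[]): StepResults {
  const results: StepResults = {};
  for (const step of steps) {
    results[step.name] = step.action(results);
  }
  return results;
}

// Stand-ins loosely named after "HTTP - GET" and "Amazon S3 - Put Object".
const results = runSteps([
  { name: "httpGet", action: () => "<html>hello</html>" },
  {
    name: "s3PutObject",
    action: (prev) => `stored ${String(prev["httpGet"]).length} bytes`,
  },
]);

console.log(results["s3PutObject"]); // stored 18 bytes
```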

A code-native integration is a set of flows (functions) written in TypeScript, each of which runs when its trigger fires.

An integration runs when its trigger fires. Triggers either follow a schedule or are invoked via a webhook URL.
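To make the code-native model concrete, the sketch below treats each flow as a plain function paired with a trigger that is either a schedule or a webhook. The `Trigger`, `Flow`, and `fire` names and the cron string are hypothetical illustrations, not the code-native SDK's actual API. In a real deployment, the platform invokes a flow when its trigger fires, rather than your code calling a helper like `fire`.

```typescript
// Hypothetical shapes for illustration; the real code-native SDK differs.
type Trigger =
  | { kind: "schedule"; cron: string } // fires on a timer
  | { kind: "webhook" }; // fires when the flow's webhook URL receives a request

interface Flow {
  name: string;
  trigger: Trigger;
  // The flow itself is just a function that runs when its trigger fires.
  run: (payload: unknown) => string;
}

const flows: Flow[] = [
  {
    name: "Nightly sync",
    trigger: { kind: "schedule", cron: "0 2 * * *" },
    run: () => "synced",
  },
  {
    name: "Order created",
    trigger: { kind: "webhook" },
    run: (payload) => `received ${JSON.stringify(payload)}`,
  },
];

// Simulate a trigger firing: look up the flow and invoke its function.
function fire(flowName: string, payload: unknown = null): string {
  const flow = flows.find((f) => f.name === flowName);
  if (!flow) throw new Error(`No flow named ${flowName}`);
  return flow.run(payload);
}

console.log(fire("Order created", { id: 42 })); // received {"id":42}
```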

Integrations should be configuration-driven so that they can be deployed to multiple customers with potentially different configurations. You accomplish this by defining config variables and configuring steps to reference those variables.
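As a minimal sketch of configuration-driven design, assume an integration defines two hypothetical config variables, `apiBaseUrl` and `syncLimit`. The flow logic reads them instead of hard-coding customer details, so the same code serves every customer. The names and URLs below are placeholders, not part of any Prismatic API.

```typescript
// Hypothetical config variables an integration builder might define.
interface ConfigVars {
  apiBaseUrl: string;
  syncLimit: number;
}

// The flow logic never hard-codes customer details; it reads config variables.
function buildSyncRequest(config: ConfigVars): string {
  return `${config.apiBaseUrl}/orders?limit=${config.syncLimit}`;
}

// The same integration, deployed to two customers with different values.
const acme: ConfigVars = { apiBaseUrl: "https://acme.example.com/api", syncLimit: 50 };
const globex: ConfigVars = { apiBaseUrl: "https://globex.example.com/api", syncLimit: 200 };

console.log(buildSyncRequest(acme)); // https://acme.example.com/api/orders?limit=50
console.log(buildSyncRequest(globex)); // https://globex.example.com/api/orders?limit=200
```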

Some integrations have a single flow: a single trigger that fires, followed by a set of steps that execute one after another. Prismatic also supports grouping multiple related flows into a single deployable integration. For example, if a third-party service (Acme ERP) sends data to your integrations through a variety of webhooks, it probably makes sense to build a single Acme ERP integration, made up of several logical flows, that you or your customers deploy. Each flow has its own trigger, but all flows share the integration's config variables.
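Here is a sketch of the Acme ERP example, assuming hypothetical types, flow names, and webhook paths that are not the code-native SDK's API: two flows, each listening on its own webhook, both receiving the same instance-level config variable (`targetWarehouse`, also hypothetical).

```typescript
// Hypothetical model for illustration; NOT the code-native SDK's API.
interface AcmeConfig {
  targetWarehouse: string;
}

interface AcmeFlow {
  name: string;
  webhookPath: string; // each flow has its own trigger (here, its own webhook)
  run: (config: AcmeConfig, payload: { id: string }) => string;
}

interface Integration {
  name: string;
  flows: AcmeFlow[];
}

const acmeErp: Integration = {
  name: "Acme ERP",
  flows: [
    {
      name: "Invoice created",
      webhookPath: "/invoice-created",
      run: (config, payload) => `invoice ${payload.id} -> ${config.targetWarehouse}`,
    },
    {
      name: "Customer updated",
      webhookPath: "/customer-updated",
      run: (config, payload) => `customer ${payload.id} -> ${config.targetWarehouse}`,
    },
  ],
};

// One deployed instance: a single set of config values shared by every flow.
const instanceConfig: AcmeConfig = { targetWarehouse: "east" };

// Route an incoming webhook to the flow that owns that path.
function handleWebhook(path: string, payload: { id: string }): string {
  const flow = acmeErp.flows.find((f) => f.webhookPath === path);
  if (!flow) throw new Error(`No flow listens on ${path}`);
  return flow.run(instanceConfig, payload);
}

console.log(handleWebhook("/invoice-created", { id: "INV-1" })); // invoice INV-1 -> east
```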

Once an integration is complete, you can publish it. Customers can then enable the integration for themselves through the embedded integration marketplace, or your team members can deploy an instance of the integration on a customer's behalf. Whoever enables the instance (your team member or your customer) configures it with customer-specific config variables.

We recommend that you follow our low-code Getting Started tutorials to first acquaint yourself with integration development and deployment.

Low-code vs code-native

The Prismatic low-code designer and code-native SDK are both great tools that you can use to build, test and deploy integrations. When using the low-code designer, you build integrations by adding triggers, actions, loops and branches to a canvas. When using the code-native SDK, you write TypeScript code to define your triggers and flow logic.

Depending on your team structure, technical expertise, and the complexity of the integration you are building, you may choose to use one or the other. Let's look at a quick comparison, with more detail below:

| Topic | Low-Code | Code-Native |
|---|---|---|
| Build method | Integration builders add triggers, flows and steps to an integration using the low-code designer. | Integration builders write triggers and integration logic in TypeScript using the code-native SDK. |
| Flows | A low-code flow is a sequence of steps that run in a specific order. | A code-native flow is a TypeScript function that executes when the flow's trigger is invoked. |
| Config wizard | Config wizards are built within the low-code designer. | The config wizard is defined in TypeScript using the code-native SDK. |
| Testing | Integrations are tested from within the integration designer. | Integrations can be tested within Prismatic or locally through unit tests. |
| Step results and logs | Results for each step are collected and stored, and can be viewed later. | Code-native integrations are "single-step", so logging is used for debugging. |
| Best fit for | Hybrid teams of developers and non-developers | Highly technical teams who prefer code |

When to use low-code vs code-native

You may want to reach for the low-code designer when:

- Your team wants to save developer time and has non-developers who are technical enough to build integrations.

- It is important to your non-developer team members to have a visual representation of the integration.

- You would like your customers to build their own integrations using the embedded designer.

You might want to use code-native when:

- You have a highly technical team that is comfortable writing TypeScript.

- Your integration requires complex logic that is easier to write in code.

- You want to unit test entire integrations rather than individual actions and triggers.

- You want to use a version control system to manage your integration code.
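Because a code-native flow is ordinary TypeScript, its logic can be tested like any other function. The sketch below tests a hypothetical `orderCreatedFlow` with a tiny hand-written `expectEqual` helper so that it runs without dependencies; in practice you would likely use a test runner such as Jest or Vitest instead. Neither name comes from Prismatic.

```typescript
// Hypothetical flow under test: normalizes an incoming order payload.
interface OrderPayload {
  id: number;
  email: string;
}

function orderCreatedFlow(payload: OrderPayload): { orderId: string; email: string } {
  return { orderId: `ORD-${payload.id}`, email: payload.email.trim().toLowerCase() };
}

// Minimal assertion helper so the example needs no test framework.
// Compares values by their JSON serialization (key order matters).
function expectEqual<T>(actual: T, expected: T, label: string): void {
  if (JSON.stringify(actual) !== JSON.stringify(expected)) {
    throw new Error(`${label}: expected ${JSON.stringify(expected)}, got ${JSON.stringify(actual)}`);
  }
  console.log(`ok - ${label}`);
}

expectEqual(
  orderCreatedFlow({ id: 7, email: "  Jane@Example.COM " }),
  { orderId: "ORD-7", email: "jane@example.com" },
  "normalizes order payload",
);
```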