For a B2B software company, standing up and maintaining infrastructure to run your customers' integrations seems simple at first. You wrote a program that transforms and moves data from point A to point B, and now you just need to run the program somewhere, right?

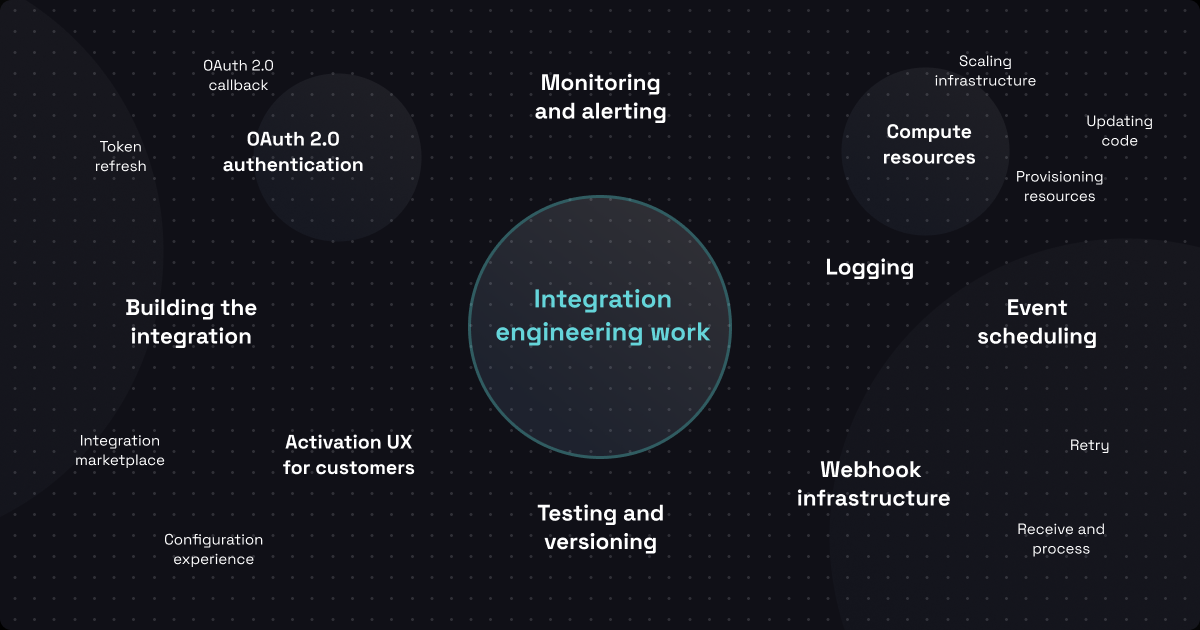

It's unintuitive, but writing the code for an integration is only a small part of integration development. Provisioning and configuring infrastructure to run your integration can take as much (or more) time as writing the integration itself. You need to consider a bunch of things with regard to infrastructure:

- What will your integrations run on (serverless functions, containers, cloud servers, virtual servers, physical servers, etc.)?

- How do you provision and configure those systems so they're ready to run your integrations?

- How readily can you configure and deploy the integrations themselves, accounting for third-party credentials and configuration differences between customers?

- Who handles updates and patching of these systems? What happens when infrastructure fails?

- Does your solution scale well as integration load increases?

Having spent nearly a decade working on infrastructure, integrations, and the intersection of the two, I'm passionate about this topic. In this post, I'll dive into these integration infra considerations in more detail. I'll go over some pain points of building and maintaining integration infrastructure yourself, and look at why choosing an embedded integration platform as a service (iPaaS) like Prismatic that handles infrastructure for you is almost certainly the right choice.

Deciding where your integrations run

The first thing you need to decide is where to run your integrations. I've run integrations on everything from serverless platforms to on-prem hardware. Many SaaS teams spin up virtual servers within a cloud provider like AWS, GCP, Oracle Cloud or Azure, spin up some containers in a managed container service or within Kubernetes, or run integrations on a serverless platform like AWS Lambda or Azure Functions. If your product runs on physical on-prem servers, you could run your integrations on those servers or on virtualized servers that share the same hardware.

Provisioning and configuring systems

Once you decide where your integrations will run, you'll need to figure out how to provision the compute power you need. For physical servers, that's a highly manual and physical process. For virtual servers, serverless, or containerized integrations you can reach for infrastructure orchestration tools like Terraform or CloudFormation paired with other tools that help you package your code into containers.

Once you've provisioned your resources, you'll also need to configure/prepare them to run your integrations. Your integrations have requirements (dependencies) – for example, an integration might need a specific version of Python installed as well as a series of Python libraries. For physical, virtual, or cloud-based servers you can use a configuration management tool like Ansible or Chef to ensure those dependencies are installed. For containerized integrations you'll use some combination of Dockerfiles and bash scripts. If you're running within a serverless environment, your build pipeline will need to package up those dependencies into your zipped build artifacts, and you'll need to make sure that whatever you zipped up is compatible with the serverless runtimes you choose to run.
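Whichever tool installs the dependencies, the underlying question is the same: does this host have the interpreter and libraries the integration expects? Here's a minimal sketch of that preflight check in Python. The function name `missing_requirements` and the idea of running it as a deploy step are assumptions for illustration, not part of any particular tool.

```python
import sys
from importlib import metadata


def missing_requirements(min_python, packages):
    """Return a list of problems; an empty list means the host looks ready."""
    problems = []
    # Compare (major, minor) of the running interpreter with the minimum.
    if sys.version_info[:2] < tuple(min_python):
        problems.append(
            "Python %d.%d+ required, found %d.%d"
            % (min_python[0], min_python[1], sys.version_info[0], sys.version_info[1])
        )
    for name in packages:
        try:
            metadata.version(name)  # raises if the distribution is not installed
        except metadata.PackageNotFoundError:
            problems.append("missing package: %s" % name)
    return problems
```

A deploy script could call something like `missing_requirements((3, 9), ["requests"])` and refuse to ship the integration if the list comes back non-empty.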

Finally, you'll need to figure out a way to get your integrations themselves to the systems they'll run on. Creating and shipping build artifacts usually involves some combination of Ansible, Chef, Terraform, CloudFormation, Docker registries, and, in all likelihood, a series of bash scripts.

The provisioning and configuring of your integration infrastructure will take a skilled DevOps team hours to prepare, and even more time to manage. These are hours you aren't spending on development of your core product, or even on integrations themselves.

That's why, when we set out with Prismatic to make integrations easier for B2B software teams, we treated infrastructure as a critical part of the platform and made sure that you don't need to worry about any of this. We chose to run your integrations on elastic cloud-based compute resources so they run in scalable, isolated environments that are configured specifically to run Prismatic integrations. Your developers use our TypeScript SDK to write any industry-specific connectors or components you might need. Your integration builders assemble and productize your integrations using a combination of your custom components and built-in components. And anyone from your company (or your customers themselves) can hit the "deploy" button to activate an integration – there's no provisioning or configuring of underlying compute required!

Additionally, you'll need to consider logging, alerting, and monitoring of your integrations, and the infrastructure that supports all of that. What happens if an integration fails to run – how do you get notified? Where do your integrations' logs go? Do you pay for Splunk or Datadog, or spin up your own ELK stack? Doing that presents a whole other set of infrastructure provisioning and configuration challenges. Prismatic handles all of those things – logging, monitoring, alerting and much more – for you.

Configuring your integrations

Now that you have an environment to deploy integrations into, your next task is to find a way to handle configuring the integrations for individual customers. Your customers' environments differ from one another – sometimes subtly, sometimes significantly. They might have different credentials that they use for third-party services, or different endpoints to hit. They might also choose to store their data in different places – for instance, one customer might use an MSSQL database, while another might use PostgreSQL. Your integrations need to be able to account for these differences, and you usually do that through configuration variables that are set during the deployment of the integration.

Each customer's unique configuration has to be stored somewhere. If you're managing integration infrastructure yourself, there are plenty of configuration management / inventory systems available to you – you can use HashiCorp's Vault to drive Terraform, or an in-house dynamic inventory to feed Ansible. Whatever you choose, you'll need to make sure that your solution scales with your customer base, and that sensitive config variables (like credentials to third-party services) are stored securely.
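To make the shape of the problem concrete, here's a minimal sketch of per-customer configuration that keeps secrets out of the stored record. Everything in it is hypothetical: `CustomerConfig`, `resolve`, and the plain dictionary standing in for a secret store are illustrative names, and a real setup would fetch secrets from something like Vault instead.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CustomerConfig:
    customer: str
    endpoint: str
    database_kind: str  # e.g. "mssql" or "postgresql"
    # Maps a variable name to a *reference* in the secret store,
    # so the secret value itself is never stored alongside the config.
    secret_refs: dict = field(default_factory=dict)


def resolve(config, secret_store):
    """Merge non-sensitive settings with secrets looked up at deploy time."""
    secrets = {name: secret_store[ref] for name, ref in config.secret_refs.items()}
    return {
        "endpoint": config.endpoint,
        "database_kind": config.database_kind,
        **secrets,
    }
```

The design choice worth noting is that the stored record holds only references. You can log or diff a `CustomerConfig` without leaking a credential, and a missing secret fails loudly with a `KeyError` at deploy time instead of at 2:00 AM in production.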

This is another place where Prismatic handles everything for you. When your integration builders create an integration in Prismatic, they design a configuration experience for whoever will be deploying the integration. Integrations are productized – that is, your integration builders package integrations in Prismatic as sellable, deployable things. When a customer needs an integration, deployment is simple – either your team or your customer chooses an integration to deploy, enters a few configuration variables and credentials, and they're good to go. We handle storing configuration and credentials securely for you, as well as other common integration-related tasks like managing the OAuth2 flow, so you don't need to spin up callback endpoints or handle customer credentials yourself.

Patching, maintenance, and infrastructure failures

At this point, your integrations have been configured and are running on some sort of compute system that you've provisioned and configured. Awesome. You're done, right?

Not so fast – what about routine patching and maintenance? Depending on what compute infrastructure you chose to use, you'll likely need to patch servers as new bugs and CVEs are addressed. If you're using physical or virtualized servers, that might entail downtime which you need to communicate to your customers, and will require someone from your systems or DevOps teams to babysit servers while they are patched. As someone who has babysat many server upgrades over the years, I can guarantee that this will cost you engineering time that is far better spent elsewhere.

If you chose to run your integrations within a container service or serverless platform you can probably get away with near-zero downtime – you can simply spin up patched containers, and shut the unpatched ones down – but you still need someone to write and babysit some Ansible or Terraform scripts to make sure your upgrades deploy successfully.

When planning out your infrastructure, you also need to consider what happens when infrastructure fails (say, a Docker registry goes down or a server's RAID controller breaks in the middle of the night). Do you have backup registries or servers to migrate to? Is mitigation a manual or automated process? By taking on infrastructure yourself, you need to dive into the world of warranties, uptime Service Level Agreements (SLAs), and other nonsense that takes you away from core product development. And I can assure you, as a former DevOpsian, that supporting failed infrastructure in the middle of the night was my least favorite part of the job!

You can take those DevOps hours back, and do something actually useful with them.

At Prismatic we handle monitoring, patching, and maintenance for you. Our integration runners run in containers within a compute service that scales horizontally. If we need to patch the integration runners, we deploy new patched ones and migrate integration work to them. Then, we retire old runners from the compute pool as they finish their work. You never have to worry about CVEs or SLAs – we take care of infrastructure resiliency and fail-overs for you.
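The deploy, drain, and retire pattern described above can be sketched in a few lines. This is not Prismatic's actual implementation. The `Runner` class and the three functions are hypothetical names, and the "pool" is just an in-memory list, but the sequencing is the part that matters.

```python
from dataclasses import dataclass


@dataclass
class Runner:
    name: str
    patched: bool
    active_jobs: int = 0
    draining: bool = False


def pick_runner(pool):
    """Send new work only to runners that are not draining; prefer the least busy."""
    candidates = [r for r in pool if not r.draining]
    if not candidates:
        raise RuntimeError("no runners available")
    return min(candidates, key=lambda r: r.active_jobs)


def roll_out_patch(pool, new_runners):
    """Mark unpatched runners as draining, then add the patched ones."""
    for runner in pool:
        if not runner.patched:
            runner.draining = True
    pool.extend(new_runners)


def retire_idle(pool):
    """Remove draining runners that have finished their work; return their names."""
    retired = [r.name for r in pool if r.draining and r.active_jobs == 0]
    pool[:] = [r for r in pool if not (r.draining and r.active_jobs == 0)]
    return retired
```

Because a draining runner keeps its in-flight jobs but receives no new ones, nothing is interrupted. You'd call `retire_idle` periodically until every unpatched runner has left the pool.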

Scaling infrastructure

Your integration may have been designed and tested to handle hundreds of requests per second, but what happens when it starts getting hundreds of thousands? Depending on your infrastructure choice, you may need to deploy a fleet of servers and load balancers to handle the uptick in traffic. Also, your traffic may be variable – it might be the case that at 8:00 AM on weekdays your integrations receive thousands of requests, but they sit relatively idle otherwise. Without some sort of cloud-based autoscaling, you could end up with extra resources that you don't use most of the time.
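The arithmetic behind that trade-off is simple enough to sketch. The function below is a hypothetical target-tracking calculation, and the capacity of 50 requests per second per runner used in the note that follows is an assumed number, not a measurement.

```python
import math


def desired_runners(requests_per_second, per_runner_capacity,
                    min_runners=1, max_runners=100):
    """Return how many runners are needed for the current load, within bounds."""
    if per_runner_capacity <= 0:
        raise ValueError("per_runner_capacity must be positive")
    # Round up: a fractional runner still means one more whole runner.
    needed = math.ceil(requests_per_second / per_runner_capacity)
    return max(min_runners, min(max_runners, needed))
```

If each runner handles 50 requests per second, a weekday-morning peak of 1,000 requests per second needs 20 runners, while an idle period needs only the minimum of 1. A statically sized fleet has to pay for all 20 around the clock.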

As I mentioned above, Prismatic takes advantage of elastic compute services to handle variable workloads. If your integrations start to receive higher volumes of requests, we simply scale our integration runner fleet, and scale down the fleet when load volumes decrease – scaling is built into the Prismatic platform and is something we worry about so you don't have to.

Why isn't something like Lambda or Step Functions sufficient?

You might be asking yourself – and many integration engineers I've worked with do at some point – can't I just throw some code into Lambda, or use Step Functions to run my integrations? The answer is both "yes" and "no". Yes, you could. You're a software company; by definition you have a whole team of engineers. If you want to build your own iPaaS on top of another tech stack you certainly can. In my experience, though, a platform like AWS gives you the parts of the car, but you still need to build the car yourself. Things like Lambda and Step Functions might work well for building integrations for internal use – they scale and provide easy deployment, but they don't handle the specific challenges B2B software teams have that come with deploying and running integrations for customers:

- As we found out, Lambda doesn't isolate one invocation of a function from the next: a warm execution environment is reused, so one customer's custom code could affect another customer's executions. You'd need to publish unique Lambda functions per customer.

- There's not a great customer self-service option for Lambda or Step Functions. So, your engineering team would need to manage configuration and deployment of each integration to each customer.

- You'd need to find some way to handle customer-specific configuration as part of your build-and-deploy pipeline to Lambda.

- Things like logging, alerting, and monitoring are not provided out of the box – you're given a toolkit and you're expected to put together the pieces.
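The isolation point is easy to underestimate, so here's a small illustration. Lambda may reuse an execution environment for later invocations of the same function, and anything stored outside the handler survives between them. The snippet below is plain Python standing in for a warm environment, not AWS code, and the handler names and event fields are hypothetical.

```python
# Module-level state lives as long as the process does, which mimics
# a warm execution environment serving several invocations in a row.
_cache = {}


def shared_handler(event):
    """One function shared by all customers, with state kept at module level."""
    # Bug: the cached token is not keyed by customer, so whoever
    # invokes first decides which token every later invocation uses.
    if "api_token" not in _cache:
        _cache["api_token"] = event["api_token"]
    return {"customer": event["customer"], "token_used": _cache["api_token"]}


def isolated_handler(event):
    """Same logic, but nothing outlives the invocation."""
    return {"customer": event["customer"], "token_used": event["api_token"]}
```

Call `shared_handler` for one customer and then for another, and the second call quietly runs with the first customer's token. When customers can supply their own code, you can't audit every line for this kind of leak, which is why separate functions (or separate processes) per customer end up being necessary.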

What usually results when companies try to build their own iPaaS is a frail integration system that's held together by duct tape, shell scripts, and Lambda shims.

Additionally, we believe integrations are a company-wide problem. When integration systems are built in-house, they're often only drivable by someone in development. Dev and DevOps shouldn't need to be involved every time an integration needs to be configured and deployed to a customer. Once an integration has been published by your integration builders, anyone from your company (or your customers themselves) should have a simple, straightforward integration deployment experience.

Building that sort of comprehensive system is a huge project that most companies can't – and shouldn't – carve out the time to undertake.

Conclusion

Building your own infrastructure for integrations is doable, but it sure isn't simple. You need to worry about provisioning and configuring resources, storing configuration, deploying integrations themselves, logging, monitoring, alerting, and a whole bunch more.

My advice to B2B software teams: you could build your own integration infrastructure, but your time is better spent elsewhere.

This post is part of a series. Check out our previous post to learn about other ways Prismatic reduces the integration workload for engineering teams.