When it comes to implementing an embedded iPaaS, I've found that many devs ask the same question: "Can't we just build this ourselves?"

The short answer is, "Yes, you can." The long answer is why I wrote this post.

Whether you are a dev whose company is considering an embedded iPaaS or a product leader preparing for an integration strategy discussion with your team, this post is for you.

You see, when your SaaS needs to build integrations to the other apps your customers use, you essentially have two choices: build everything in-house or reach for a tool such as an embedded iPaaS. As a software company, by definition, you have teams of developers capable of building integrations and the infrastructure and UI you need to build, deploy, and manage them.

But I've noticed something, both from my experience working on integrations as a dev and DevOps engineer in a previous life, and now as a developer advocate helping teams from all over the SaaS world with their integrations.

When building integrations, it's easy to focus on writing the code that moves data from one app to another while overlooking all the pieces of infrastructure that are necessary to keep those integrations running, and the UI you need to build to create a good configuration experience for your customers. And if you do that, you could overlook 80% of what your integrations require.

In fact, I'd go so far as to say that building the integrations themselves isn't the hardest part of the integration problem – it's all of the infrastructure, maintenance, and UI that's especially hard to get right and incredibly time-consuming. So while you could build all of that yourself, should you?

To answer that, let's look at all the often-ignored (but nonetheless critical) pieces that it takes to build a B2B SaaS integration environment.

What compute resources will you need?

So, you've written some code that runs in your IDE that moves data between your app and a third party. Awesome! Now, you need to figure out where your code should run in production.

Provisioning resources

You'll need some sort of compute resource that can execute your code. If you deploy to AWS Lambda or another serverless system, you'll need to write some CloudFormation or Terraform templates to provision those serverless resources. If you want to run in a Kubernetes cluster or ECS service, you'll first need to provision that cluster and then build a container pipeline that packages your code into a container with its dependencies. If you want to run on your own bare-metal servers or within VMs or EC2 instances, you'll need to build installation scripts that ensure your servers have the software necessary to run your code.

Updating code

Once you've provisioned compute resources, you'll need to figure out how to deploy and update your code. Swapping out containers or Lambda definitions works for container or serverless deployments, but in the case of servers, VMs, or EC2 instances, you'll probably need to devise a sophisticated blue/green deployment. You'll also need to ask yourself several questions. What happens if your integration is executing when a container is replaced: does the container wait for the execution to complete, or does the execution terminate midway through? Can you readily roll back a deployment to a previous version if you detect issues? Can you quickly deploy different versions to different customers?

Scaling infrastructure

When data flows between apps, it often comes in bursts. A customer may close out 10,000 opportunities in Salesforce in bulk, and your integration needs to be ready to handle 10,000 webhook requests in a short period when it received none in the minutes prior. As you build out compute resources, you'll need to ensure your infrastructure can handle large bursts of requests. At the same time, third-party apps can be misconfigured and effectively DDoS your webhook endpoints. In those situations, you may need to configure WAF or API gateway rules to protect you from scaling compute resources unnecessarily and tune those as required.
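To make the burst-versus-flood distinction concrete, here is a minimal sketch of a token bucket, the kind of logic a WAF or API gateway rate-limit rule typically applies per endpoint. The class name and parameters are my own illustration, not any gateway's actual configuration.

```python
class TokenBucket:
    """Lets bursts of up to `capacity` requests through, then throttles
    to `refill_per_sec`. Hypothetical helper, one bucket per endpoint."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = capacity      # start full so a legitimate burst passes
        self.last_refill = 0.0      # timestamp of the previous call

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # caller would typically respond with HTTP 429
```

In practice you would pass `time.monotonic()` as `now` and size `capacity` to the largest burst you consider legitimate, such as that 10,000-opportunity bulk close.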

How will you handle integration activation UI for customers?

While you may hand-hold your first few customers through integration deployments, you'll eventually want to productize your integrations and make them something your customers can activate for themselves.

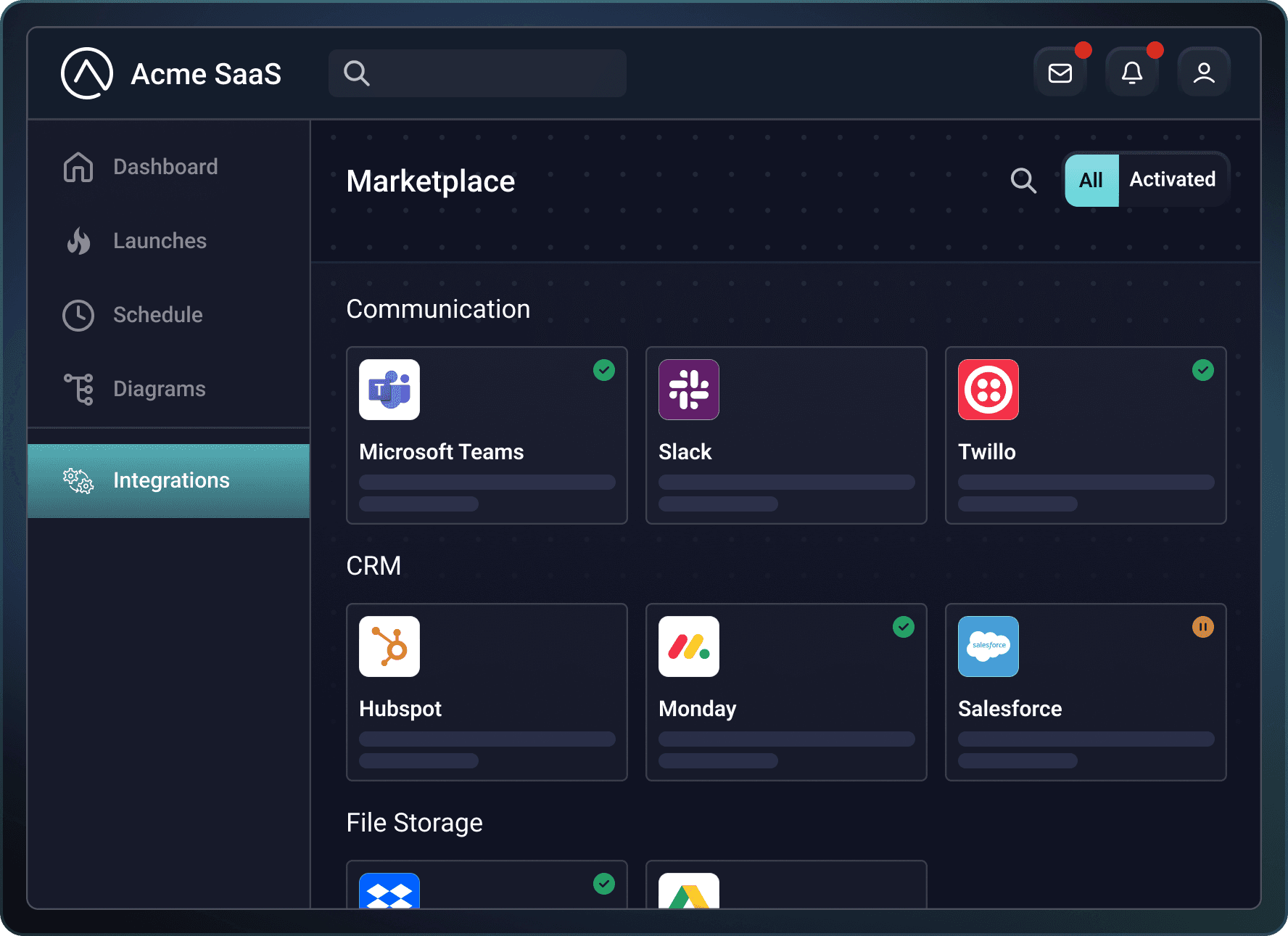

Integration marketplace

Your customers will expect to be able to manage integrations somewhere within your app. While it's not a huge task for a frontend developer to create a simple list view of your integrations, you will need to think about what happens as you add additional integrations. Will you need to involve your frontend team whenever you add or remove an integration? Or will your frontend team need to source your list of integrations from some external system? Who maintains which integrations are shown? Can you show different sets of integrations to customers depending on their needs?

Integration configuration experience

Your customers will need to provide you, at minimum, with some way to connect to a third-party app on their behalf. But, usually, the integration configuration experience is more involved. Your customer may want to select a Slack channel from a dynamically populated dropdown menu or perform a data mapping between Salesforce custom fields and fields in your app. Will your frontend developers need to write custom code for each configuration experience, or will they build a generalized configuration solution that can display any configuration experience that an integration builder can create?
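One way to picture the generalized option: each integration declares its configuration fields as data, the frontend renders whatever it is given, and the backend validates submissions against the same declaration. The sketch below is only an illustration of that idea. The `ConfigField` shape and the field kinds are assumptions of mine, and a dynamically populated dropdown would fetch `options` from the third-party app at render time rather than hard-coding them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfigField:
    """One input on a configuration screen (hypothetical schema)."""
    key: str
    label: str
    kind: str                      # e.g. "text" or "dropdown"
    required: bool = True
    options: tuple[str, ...] = ()  # allowed values for dropdowns


def validate_config(fields: list[ConfigField], values: dict[str, str]) -> list[str]:
    """Return human-readable errors; an empty list means the config is valid."""
    errors = []
    for f in fields:
        value = values.get(f.key)
        if value is None or value == "":
            if f.required:
                errors.append(f"{f.label} is required")
            continue
        if f.kind == "dropdown" and value not in f.options:
            errors.append(f"{f.label} must be one of: {', '.join(f.options)}")
    return errors
```

With this shape, adding an integration means adding a list of `ConfigField` entries rather than writing a new screen.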

On the backend, your team will need to keep track of integration configurations for each of your customers. Your integration code will need to have access to the configurations it needs, something that likely requires building new APIs on your backend. Depending on your implementation process, your backend team may need to be involved with each new integration your integration team builds.

How will you manage OAuth 2.0?

The OAuth 2.0 auth code flow is one of the most common authorization mechanisms used in integrations. With OAuth 2.0, users can click a single button in your app, consent to provide your app with a set of permissions in a third-party app, and then return to your app to continue their integration activation process. To the user, the process appears simple, but to a developer, there is infrastructure you need to stand up to support OAuth.

OAuth 2.0 callbacks

When a user returns to your app after interacting with a third-party app's consent screen, the user needs to return to a predetermined "callback URL." Your callback URL will need to determine which user and which integration the OAuth auth code is for using a state property that your app will track. So, you'll need infrastructure for both generating OAuth authorize URLs and for receiving auth codes from users. Additionally, your OAuth callback URL will need to be able to exchange auth codes for access tokens with these third-party apps.

Token refreshes

OAuth access tokens are often designed to expire and can live anywhere from a few minutes to a few days. You will need to develop an OAuth service that periodically refreshes tokens for all your customers' deployed integrations before they expire and that alerts you (or your customer) if a token refresh fails.
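The core loop of such a service might look something like the sketch below: find tokens that expire within a safety margin, refresh them, and raise an alert on failure. `StoredToken`, the five-minute margin, and the injected `refresh` and `alert` callables are all assumptions of mine. The actual refresh is an HTTP call to each third party's token endpoint, which is not shown.

```python
from collections.abc import Callable, Iterable
from dataclasses import dataclass


@dataclass
class StoredToken:
    customer_id: str
    integration_id: str
    expires_at: float  # Unix timestamp


def tokens_due_for_refresh(
    tokens: Iterable[StoredToken], now: float, margin_seconds: float = 300.0
) -> list[StoredToken]:
    """Tokens that have expired or will expire within the safety margin."""
    return [t for t in tokens if t.expires_at - now <= margin_seconds]


def refresh_all(
    tokens: Iterable[StoredToken],
    now: float,
    refresh: Callable[[StoredToken], float],           # returns the new expires_at
    alert: Callable[[StoredToken, Exception], None],   # notifies you or the customer
    margin_seconds: float = 300.0,
) -> None:
    """Refresh every due token; one failure must not block the others."""
    for token in tokens_due_for_refresh(tokens, now, margin_seconds):
        try:
            token.expires_at = refresh(token)
        except Exception as exc:
            alert(token, exc)
```

You would run `refresh_all` on a timer shorter than the margin, so no token can slip from "not yet due" to "expired" between runs.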

What will the webhook infrastructure look like?

When moving data between your app and the other apps your customers use, event-driven integrations are ideal. When an event occurs in the third-party app (for example, a Lead is created in Salesforce), it can notify your app via a webhook payload that the event occurred.

Webhook processing

To support incoming webhooks, you will need to build a scalable service that can receive and process the webhooks. The service will need to determine (either using unique endpoints or via some other mechanism) which customer and integration a webhook payload is for. Then, it will need to dispatch the request to the appropriate compute resource. If compute resources are not scaled properly, your webhook service will need to be able to queue up requests, so you'll need to support some queuing service in your infrastructure.
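Here is a small sketch of the "unique endpoints plus a queue" approach. The routing table, class names, and status codes are my own choices for illustration, and the in-process `queue.Queue` stands in for whatever durable queuing service you would actually run.

```python
import queue
from dataclasses import dataclass


@dataclass(frozen=True)
class WebhookJob:
    customer_id: str
    integration_id: str
    body: bytes


class WebhookReceiver:
    """Maps a unique endpoint ID to a customer and integration, then enqueues the payload."""

    def __init__(self, routes: dict[str, tuple[str, str]], max_queued: int = 10_000) -> None:
        self._routes = routes  # endpoint_id -> (customer_id, integration_id)
        self._jobs: queue.Queue[WebhookJob] = queue.Queue(maxsize=max_queued)

    def receive(self, endpoint_id: str, body: bytes) -> int:
        """Return the HTTP status to send back to the third-party app."""
        route = self._routes.get(endpoint_id)
        if route is None:
            return 404  # no integration is listening on this endpoint
        try:
            self._jobs.put_nowait(WebhookJob(*route, body))
        except queue.Full:
            return 503  # ask the sender to retry later
        return 202      # accepted; processing happens asynchronously

    def next_job(self) -> WebhookJob:
        """Called by a worker; raises queue.Empty when there is nothing to do."""
        return self._jobs.get_nowait()
```

Acknowledging with a 202 before processing keeps the endpoint fast during bursts, since the sender only waits for the enqueue.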

Retry logic

If the compute resource fails to process the webhook request fully, you will need to build some sort of retry logic to attempt to process the payload again. If you hit an edge case that requires a code fix, your infrastructure should be able to hold on to the webhook payload and retry it once the corrected code is deployed.
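A minimal sketch of both behaviors, assuming exponential backoff with jitter for the automatic retries and a simple "held" list for payloads that need a code fix first. The function names, the delay parameters, and the list standing in for a dead-letter queue are all illustrative assumptions.

```python
import random
from collections.abc import Callable


def backoff_delay(
    attempt: int,
    base: float = 1.0,
    cap: float = 300.0,
    jitter: Callable[[], float] = random.random,
) -> float:
    """Exponential backoff with full jitter: a random delay up to min(cap, base * 2**attempt)."""
    return min(cap, base * 2 ** attempt) * jitter()


def process_with_retries(
    payload: dict,
    handler: Callable[[dict], None],
    max_attempts: int,
    sleep: Callable[[float], None],
    held: list[dict],
) -> bool:
    """Try the handler up to max_attempts times; park the payload in `held` if all fail."""
    for attempt in range(max_attempts):
        try:
            handler(payload)
            return True
        except Exception:
            if attempt + 1 < max_attempts:
                sleep(backoff_delay(attempt))
    held.append(payload)  # kept for replay once corrected code is deployed
    return False
```

After deploying a fix, you would feed each payload in `held` back through `process_with_retries` with the new handler.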

How will you manage event scheduling?

Some third-party apps do not support webhooks. In those cases, running integration code on a regular cadence can help keep your app and a third-party app in sync. You will need to build a service that invokes your customers' integrations on a schedule. You may need to build configuration options in your frontend and storage in your backend to support custom schedules specified by your customers.
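At its simplest, the scheduling service is a loop that wakes up, finds the integrations that are due, and invokes them. The sketch below assumes fixed intervals per customer. The `Schedule` record and the `invoke` callable are hypothetical, and cron-style schedules would need more than this.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Schedule:
    customer_id: str
    integration_id: str
    interval_seconds: float  # customer-specified cadence
    next_run_at: float       # Unix timestamp


def run_due(
    schedules: list[Schedule], now: float, invoke: Callable[[str, str], None]
) -> int:
    """Invoke every integration whose next run time has passed; return how many ran."""
    ran = 0
    for s in schedules:
        if s.next_run_at <= now:
            invoke(s.customer_id, s.integration_id)
            # Schedule from `now`, not the missed slot, so an outage doesn't
            # cause a burst of catch-up runs when the service comes back.
            s.next_run_at = now + s.interval_seconds
            ran += 1
    return ran
```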

What data will you log and how?

If you've addressed everything above, you have built infrastructure to run your integrations, an OAuth 2.0 service, a webhook service, and an event scheduler. All those services will have logs, and those logs need to go somewhere. Most companies leverage a log provider like Datadog, New Relic, or Splunk. You will need to ensure that the infrastructure you build captures logs from your runners and supporting services. That usually involves installing agents from your log provider on your compute resources and keeping those agents up to date.
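Whichever provider you choose, logs are far more useful if every line says which customer and integration it belongs to. Here is one possible sketch using Python's standard `logging` module to emit JSON lines. The field names `customer_id` and `integration_id` are my own convention, not something a particular log provider requires.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Formats each record as one JSON object so a log agent can index the fields."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "message": record.getMessage(),
            # Supplied by callers through `extra=`; None when absent.
            "customer_id": getattr(record, "customer_id", None),
            "integration_id": getattr(record, "integration_id", None),
        })


def build_logger(name: str = "integrations") -> logging.Logger:
    """Logger that writes JSON lines to stderr, where an agent can pick them up."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeat calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

A runner would then log with something like `build_logger().info("sync finished", extra={"customer_id": "cust-1", "integration_id": "salesforce"})`.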

What will you need for monitoring and alerting?

You'll want to know when something goes wrong. It's better to detect issues before your customer reports that data isn't flowing between your app and a third-party app. To do that, you will need to build an alerting service that detects error scenarios in your integrations and notifies you when those errors occur, with direct links to related log lines.

You can often leverage monitoring features in your log provider to detect error states, but you may need to tune those monitors to detect failures accurately. Depending on your implementation and needs, you may need to build an additional monitoring and alerting service that sends alerts or logs to your team or customer when an integration isn't behaving as expected.

How will you test and version?

It's essential to test integrations in a sandboxed environment that resembles production as closely as possible. While lots of code can be tested in an IDE through unit tests, environment differences between a local environment and a production environment can cause unexpected errors.

As you build your integrations, you'll likely need to build some infrastructure that allows you to deploy new versions of your integration quickly to a prod-like environment for QA and UAT. Due to the iterative nature of integrations, it's helpful to deploy and test new code quickly without requiring a long release cycle. That is, it's helpful to deploy integrations out-of-band with the rest of your product.

How will you manage the security?

Integrations involve storing credentials to third-party apps and often involve moving PII or other sensitive information from one app to another. Your team will need to build infrastructure to encrypt third-party credentials in transit and at rest and architect data access so that integrations can only access credentials and configurations pertinent to the current customer.
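The data-access part of that can be sketched as a vault that only ever hands integration code a view scoped to the current customer. Everything here is hypothetical, and two caveats apply: the stored values are assumed to be already encrypted by something outside this sketch, and Python objects alone don't enforce isolation, so a real system would back this boundary with process isolation or access policies.

```python
class CredentialVault:
    """Holds credentials for all customers. Integration code never receives this object."""

    def __init__(self) -> None:
        # (customer_id, integration_id) -> secret. Values are assumed to be
        # ciphertext; encryption at rest is out of scope for this sketch.
        self._secrets: dict[tuple[str, str], bytes] = {}

    def put(self, customer_id: str, integration_id: str, secret: bytes) -> None:
        self._secrets[(customer_id, integration_id)] = secret

    def scoped_to(self, customer_id: str) -> "CustomerCredentials":
        """Create the only handle an integration execution is given."""
        return CustomerCredentials(self, customer_id)

    def _get(self, customer_id: str, integration_id: str) -> bytes:
        return self._secrets[(customer_id, integration_id)]


class CustomerCredentials:
    """Read-only view limited to a single customer's credentials."""

    def __init__(self, vault: CredentialVault, customer_id: str) -> None:
        self._vault = vault
        self._customer_id = customer_id

    def get(self, integration_id: str) -> bytes:
        # The customer ID is fixed at construction, so a caller cannot ask
        # for another customer's secret through this interface.
        return self._vault._get(self._customer_id, integration_id)
```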

How will you monitor the infrastructure, know what normal vs abnormal looks like, detect potential incidents, respond to incidents, and ensure your customer data isn't breached?

Depending on who your customers are and what data you process, you may be subject to HIPAA, DPA, GDPR, CJIS, or other regulations. Your team will need to make sure that the integration infrastructure you build adheres to those regulations. These regulations often require certain things to be updated or performed annually, or whenever there is a change in the infrastructure.

How will you calculate tool and maintenance costs?

The infrastructure you build to support integrations, webhooks, OAuth 2.0, user configuration, logging, monitoring, and alerting isn't free. Your team will need to research which compute resources are appropriate for you and your needs. Additionally, your team will probably need to pull in queues for webhooks and inter-service messaging and databases for persisting data between executions and storing configuration.

In terms of people-cost, it's vital that integration infrastructure stays up and running. Your team will likely need to designate an on-call DevOps engineer who can respond to alerts and address infrastructure issues as they arise.

An integration is so much more than it first appears

You are a software company, and you have a smart team of engineers capable of building the infrastructure necessary to run integrations.

But all that effort comes at a cost. It's easy to concentrate on the integration code and underestimate the supporting infrastructure and UI that you'll require.

As you weigh your options and choose whether to build integration infrastructure yourself or reach for an embedded iPaaS, consider the full extent of what it takes to build – not to mention maintain – robust runner, OAuth 2.0, configuration, webhook, event scheduling, logging, monitoring, and alerting infrastructure.

If you have questions about integration infrastructure or anything else regarding integrations and embedded iPaaS, contact us.